Reference (official documentation): https://docs.cloudera.com/documentation/manager/5-1-x/Cloudera-Manager-Managing-Clusters/Managing-Clusters-with-Cloudera-Manager.html

Environment: CM and CDH 5.13

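According to the official documentation, the number of DataNodes remaining after decommissioning must not be less than the configured replication factor. Here we are cutting a 100-node cluster in half, so this is not a concern.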
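The rule above is simple arithmetic. Below is a minimal shell sketch of the pre-check. It assumes you supply the current DataNode count, the number of hosts to remove, and the replication factor yourself. The function name `can_decommission` is hypothetical.

```shell
# Hypothetical pre-check: after removing REMOVE of TOTAL DataNodes,
# at least RF (the replication factor) DataNodes must remain.
# On a cluster host, RF can be read with: hdfs getconf -confKey dfs.replication
can_decommission() {
  total=$1
  remove=$2
  rf=$3
  remaining=$((total - remove))
  echo "remaining DataNodes: $remaining, replication factor: $rf"
  [ "$remaining" -ge "$rf" ]
}

# Example from this cluster: 100 nodes, removing half, replication factor 3.
can_decommission 100 50 3 && echo "OK to proceed"
```

The function returns a non-zero status when too few DataNodes would remain, so it can guard later steps in a script.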

1. Decommissioning nodes

Log in to the CM web UI as the admin user.

1) Modify the related configuration

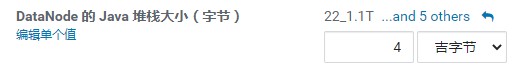

Increase the Java heap size to 4 GB.

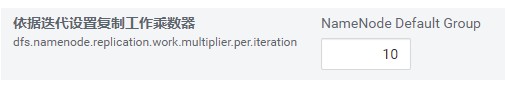

2) Set the replication work multiplier (Replication Work Multiplier Per Iteration)

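3) Add the following property to hdfs-site.xml (in CM, through the HDFS Service Advanced Configuration Snippet (Safety Valve) for hdfs-site.xml):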

<property>
  <name>dfs.namenode.replication.max-streams</name>
  <value>100</value>
</property>

4) Save the configuration and restart HDFS so that it takes effect.

5) Go to the Hosts page, select the hosts to be removed, and choose the Decommission action (one or two hosts at a time).

The selected hosts then enter the decommissioning state and begin migrating their data.

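Run hdfs dfsadmin -report and check that the corresponding hosts show "Decommission Status : Decommission in progress", then wait for the migration to finish. With large data volumes this can take a very long time.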
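To follow progress across many hosts, the report can be condensed to one line per DataNode. The following is a minimal sketch. It assumes the usual report layout, in which each DataNode section contains a `Hostname:` line followed by a `Decommission Status :` line. `decom_summary` is a hypothetical helper name.

```shell
# Hypothetical helper: read "hdfs dfsadmin -report" output on stdin and print
# "<hostname><TAB><decommission status>" for every DataNode in the report.
decom_summary() {
  awk '
    /^Hostname: / { host = $2 }
    /^Decommission Status : / {
      status = $0
      sub(/^Decommission Status : /, "", status)
      print host "\t" status
    }'
}

# Usage (on a cluster host):
#   hdfs dfsadmin -report | decom_summary
#   hdfs dfsadmin -report | decom_summary | grep -c 'Decommission in progress'
```

The second usage line counts how many hosts are still migrating data.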

2. Troubleshooting node decommissioning

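Decommissioning by migrating data takes a very long time, so we chose to stop the services on the nodes and remove them instead. Data has 3 replicas by default, but some data has fewer replicas than that. Stopping the services therefore caused missing HDFS blocks and corrupted Kudu tables.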

- Missing HDFS blocks

Find the corrupt files:

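# Check node status

hdfs dfsadmin -report

# List missing blocks (drops fsck's progress dots and the replica-related lines)

hdfs fsck / | egrep -v '^\.+$' | grep -v eplica

# Export the corrupt blocks and the files they belong to

hdfs fsck / -list-corruptfileblocks > block_corrupt_file.txt

# Show the blocks and block locations of a specific file

hdfs fsck hdfs://nameservice1/hbase/data/UCS/<file-path> -files -blocks -locations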
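The exported list contains one block per line, so a file with several bad blocks appears several times. The following is a minimal sketch that reduces the list to unique file paths and then inspects each one. It assumes the usual `blk_<id><TAB><path>` line format of `-list-corruptfileblocks`. `corrupt_paths` is a hypothetical helper name.

```shell
# Hypothetical helper: read "hdfs fsck <path> -list-corruptfileblocks" output
# on stdin and print each affected file path once, in first-seen order.
# Header/footer lines (e.g. "The list of corrupt files ...") are ignored.
corrupt_paths() {
  awk '
    /^blk_/ {
      path = $0
      sub(/^blk_[^ \t]*[ \t]+/, "", path)   # drop the block name and separator
      if (!(path in seen)) { seen[path] = 1; print path }
    }'
}

# Usage (on a cluster host):
#   corrupt_paths < block_corrupt_file.txt | while IFS= read -r f; do
#     hdfs fsck "$f" -files -blocks -locations
#   done
```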

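Try to repair the file with the following command. This only helps files that were left open for write with an unreleased lease; it cannot bring back missing blocks.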

hdfs debug recoverLease -path <file-path> -retries 3  # retry up to 3 times

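If that does not fix it, restart the stopped services. The missing blocks come back once the DataNodes holding them are running again, and HDFS then creates additional replicas according to the configured replication factor. After re-replication completes, stopping the services no longer causes missing blocks. With large data volumes this process takes a long time.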

Corrupt files that are not important can simply be deleted:

[hdfs@syshdp-kudu01 ~]$ hdfs fsck -delete /dataLake/csg/ods/xxxxxxxxxxxxxxxx.dat

Connecting to namenode via http://xxxxxxxx:9870/fsck?ugi=hdfs&delete=1&path=%2FdataLake%2Fcsg%2Fods%2Finitdata2%2F03%2Frz%2Fhr_gd-tb_ins_employee_pay20200904024951bak%2FHR_GD_TB_INS_EMPLOYEE_PAY_20200903013040.dat

FSCK started by hdfs (auth:SIMPLE) from /10.92.172.48 for path /dataLake/csg/ods/initdata2/03/rz/hr_gd-tb_ins_employee_pay20200904024951bak/HR_GD_TB_INS_EMPLOYEE_PAY_20200903013040.dat at Thu Jun 17 14:46:02 CST 2021

/dataLake/csg/ods/initdata2/03/rz/hr_gd-tb_ins_employee_pay20200904024951bak/HR_GD_TB_INS_EMPLOYEE_PAY_20200903013040.dat: Under replicated BP-888318432-xxxxxxxxx-1585342091170:blk_1086261440_12525957. Target Replicas is 2 but found 1 live replica(s), 0 decommissioned replica(s), 0 decommissioning replica(s).

/dataLake/csg/ods/initdata2/03/rz/hr_gd-tb_ins_employee_pay20200904024951bak/HR_GD_TB_INS_EMPLOYEE_PAY_20200903013040.dat: Under replicated BP-888318432-xxxxxxxxxx-1585342091170:blk_1086261558_12526075. Target Replicas is 2 but found 1 live replica(s), 0 decommissioned replica(s), 0 decommissioning replica(s).

/dataLake/csg/ods/initdata2/03/rz/hr_gd-tb_ins_employee_pay20200904024951bak/HR_GD_TB_INS_EMPLOYEE_PAY_20200903013040.dat: Under replicated BP-888318432-xxxxxxxxx-1585342091170:blk_1086261566_12526083. Target Replicas is 2 but found 1 live replica(s), 0 decommissioned replica(s), 0 decommissioning replica(s).

/dataLake/csg/ods/initdata2/03/rz/hr_gd-tb_ins_employee_pay20200904024951bak/HR_GD_TB_INS_EMPLOYEE_PAY_20200903013040.dat: Under replicated BP-888318432-xxxxxxxx1585342091170:blk_1086261574_12526091. Target Replicas is 2 but found 1 live replica(s), 0 decommissioned replica(s), 0 decommissioning replica(s).

/dataLake/csg/ods/initdata2/03/rz/hr_gd-tb_ins_employee_pay20200904024951bak/HR_GD_TB_INS_EMPLOYEE_PAY_20200903013040.dat: Under replicated BP-888318432-xxxxxxxxxx-1585342091170:blk_1086261592_12526109. Target Replicas is 2 but found 1 live replica(s), 0 decommissioned replica(s), 0 decommissioning replica(s).

/dataLake/csg/ods/initdata2/03/rz/hr_gd-tb_ins_employee_pay20200904024951bak/HR_GD_TB_INS_EMPLOYEE_PAY_20200903013040.dat: MISSING 7 blocks of total size 891792840 B.

Status: CORRUPT

Number of data-nodes: 63

Number of racks: 1

Total dirs: 0

Total symlinks: 0

Replicated Blocks:

Total size: 17266355656 B

Total files: 1

Total blocks (validated): 129 (avg. block size 133847718 B)

********************************

UNDER MIN REPL'D BLOCKS: 7 (5.426357 %)

dfs.namenode.replication.min: 1

CORRUPT FILES: 1

MISSING BLOCKS: 7

MISSING SIZE: 891792840 B

********************************

Minimally replicated blocks: 122 (94.57365 %)

Over-replicated blocks: 0 (0.0 %)

Under-replicated blocks: 5 (3.875969 %)

Mis-replicated blocks: 0 (0.0 %)

Default replication factor: 3

Average block replication: 1.8527132

Missing blocks: 7

Corrupt blocks: 0

Missing replicas: 5 (1.9379845 %)

Blocks queued for replication: 0

Erasure Coded Block Groups:

Total size: 0 B

Total files: 0

Total block groups (validated): 0

Minimally erasure-coded block groups: 0

Over-erasure-coded block groups: 0

Under-erasure-coded block groups: 0

Unsatisfactory placement block groups: 0

Average block group size: 0.0

Missing block groups: 0

Corrupt block groups: 0

Missing internal blocks: 0

Blocks queued for replication: 0

FSCK ended at Thu Jun 17 14:46:02 CST 2021 in 6 milliseconds

The filesystem under path '/dataLake/csg/ods/initdata2/03/rz/hr_gd-tb_ins_employee_pay20200904024951bak/HR_GD_TB_INS_EMPLOYEE_PAY_20200903013040.dat' is CORRUPT

In short, the goal is to make sure all data has multiple replicas, so that removing nodes does not lose data.
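Files created with a lower replication factor are the ones at risk when nodes are stopped. In the fsck output above, the file has "Target Replicas is 2". The following is a minimal sketch that lists such files so their replication can be raised before any node is stopped. It assumes the standard `hdfs dfs -ls -R` column layout, in which the second column is the replication factor. `low_rep_files` is a hypothetical helper name.

```shell
# Hypothetical helper: read "hdfs dfs -ls -R <dir>" output on stdin and print
# the paths of regular files whose replication factor (2nd column) is below
# MIN (default 3). Directories show "-" in that column and are skipped.
low_rep_files() {
  awk -v min="${1:-3}" '
    $1 ~ /^-/ && $2 ~ /^[0-9]+$/ && $2 + 0 < min + 0 {
      path = $0
      # Strip the first 7 columns: perms, replication, owner, group,
      # size, date, time. What remains is the path (spaces preserved).
      for (i = 1; i <= 7; i++) sub(/^[^ ]+ +/, "", path)
      print path
    }'
}

# Usage (on a cluster host; -w waits until replication finishes, which can be slow):
#   hdfs dfs -ls -R /dataLake | low_rep_files 3 | while IFS= read -r f; do
#     hdfs dfs -setrep -w 3 "$f"
#   done
```

Running `hdfs dfs -setrep 3 <dir>` on a directory changes every file beneath it, including files that intentionally have more than three replicas. This sketch only touches files below the threshold.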

- Repairing corrupted Kudu tables

# Check Kudu table status (the arguments are the Kudu master addresses)

sudo -u kudu kudu cluster ksck syshdp-kudu01,syshdp-kudu02,syshdp-kudu03

Tables reported as unhealthy need to be repaired.

Repair: find the tablets to repair in the ksck output, for example:

Tablet 59a6c5a91dea41cfbf47b0c0da2a26ab of table 'impala::csg_ods_yx.hs_dftzdmxxx_kudu' is unavailable: 2 replica(s) not RUNNING

b3f35424663748d2a06f8cb88a45ece6 (syshdp-kudu47:7050): TS unavailable

1e0732d2263c4432a35349fcfa6eae5f (syshdp-kudu73:7050): RUNNING [LEADER]

3c4fb1669e7645beb9800d9d09e4a1fe (syshdp-kudu10:7050): not running

State: FAILED

# Command

sudo -u kudu kudu remote_replica unsafe_change_config syshdp-kudu73:7050 59a6c5a91dea41cfbf47b0c0da2a26ab 1e0732d2263c4432a35349fcfa6eae5f

# Command (for another affected tablet)

sudo -u kudu kudu remote_replica unsafe_change_config syshdp-kudu38:7050 84b398808bbf449c86d3d625889cfbde e5464e8b7e1145b988b2d16b66fdc438

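Command explanation. The command forcibly rewrites the tablet's Raft configuration so that only the listed healthy replica remains. The master then re-replicates the tablet until the table's replication factor is restored.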

sudo -u kudu kudu remote_replica unsafe_change_config tserver-00:7150 <tablet-id> <tserver-00-uuid>

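tserver-00:7150 (1st argument) is the tserver hosting the healthy replica;

<tablet-id> (2nd argument) is the affected tablet;

<tserver-00-uuid> (3rd argument) is the UUID of the tserver hosting the healthy replica.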
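When many tablets are affected, the commands can be generated from the ksck output instead of being typed by hand. The following is a minimal sketch. It assumes the ksck layout shown above: a `Tablet <id> of table ... is unavailable` line, followed by one `<uuid> (<host:port>): <state>` line per replica and a closing `State:` line. `ksck_to_unsafe_cmds` is a hypothetical helper name.

The helper only prints the commands so that each one can be reviewed before it is run. unsafe_change_config may discard writes that exist only on the dropped replicas.

```shell
# Hypothetical helper: read "kudu cluster ksck" output on stdin and print one
# unsafe_change_config command per unavailable tablet, keeping only the
# replicas reported as RUNNING. Commands are printed, never executed.
ksck_to_unsafe_cmds() {
  awk '
    function flush() {
      if (tablet != "" && uuids != "")
        print "sudo -u kudu kudu remote_replica unsafe_change_config " addr " " tablet uuids
      tablet = ""; uuids = ""; addr = ""
    }
    # Start of a tablet report, e.g. "Tablet <id> of table ... is unavailable: ..."
    /^[ \t]*Tablet [0-9a-f]+ of table .* is unavailable/ { flush(); tablet = $2; next }
    # Replica line: "<uuid> (<host:port>): RUNNING [LEADER]"
    tablet != "" && $3 ~ /^RUNNING/ {
      if (addr == "") { addr = $2; sub(/^\(/, "", addr); sub(/\):$/, "", addr) }
      uuids = uuids " " $1
      next
    }
    # "State: ..." closes the tablet report
    tablet != "" && /^[ \t]*State:/ { flush() }
    END { flush() }
  '
}

# Usage (review the output before running any of it):
#   sudo -u kudu kudu cluster ksck syshdp-kudu01,syshdp-kudu02,syshdp-kudu03 | ksck_to_unsafe_cmds
```

For the sample output above, the sketch prints the same command as the first one shown.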

3. Removing the nodes after stopping their services

Reference: https://mp.weixin.qq.com/s/wj2tCJu_uaGWoDSYswlmQg

In the CM UI, first remove the hosts from the cluster, then choose Remove From Cloudera Manager.

4. Removing nodes from a Hadoop cluster on the command line

1) Add the following property to hdfs-site.xml on the NameNode host:

<property>
  <name>dfs.hosts.exclude</name>
  <value>/etc/hadoop/conf/dfs.exclude</value>
</property>

2) Create the exclude file in the configuration directory

Write the hostnames of the nodes to be removed into /etc/hadoop/conf/dfs.exclude, one hostname per line.
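When hosts are removed in several batches, it helps to append only names that are not listed yet. The following is a minimal sketch. `add_to_exclude` is a hypothetical helper name, and the file argument is whatever `dfs.hosts.exclude` points to (here /etc/hadoop/conf/dfs.exclude).

```shell
# Hypothetical helper: append hostnames to an HDFS exclude file, one per line,
# skipping any hostname that is already listed (whole-line, fixed-string match).
add_to_exclude() {
  exclude_file=$1
  shift
  touch "$exclude_file" || return 1
  for host in "$@"; do
    grep -qxF "$host" "$exclude_file" || printf '%s\n' "$host" >> "$exclude_file"
  done
}

# Usage (on the NameNode host):
#   add_to_exclude /etc/hadoop/conf/dfs.exclude <host1> <host2>
```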

3) Refresh the node list

hdfs dfsadmin -refreshNodes

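Run hdfs dfsadmin -report and check that the status of the nodes being removed changes from Normal to Decommission in progress, then wait until it shows Decommissioned.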
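The wait can be scripted by polling the report until no DataNode is still decommissioning. The following is a minimal sketch. `wait_for_decommission` and its polling-interval argument are hypothetical, and the script assumes the report's `Decommission Status : Decommission in progress` wording.

```shell
# Hypothetical helper: poll "hdfs dfsadmin -report" every INTERVAL seconds
# (default 60) until no DataNode reports "Decommission in progress".
wait_for_decommission() {
  interval=${1:-60}
  while hdfs dfsadmin -report | grep -q '^Decommission Status : Decommission in progress'; do
    sleep "$interval"
  done
  echo "no DataNode is decommissioning any more"
}

# Usage (on a cluster host, as the hdfs user):
#   wait_for_decommission 300
```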